The Summer of Fake AI Novels

Some newspapers around the country, including the Chicago Sun-Times and at least one edition of The Philadelphia Inquirer, have published a syndicated summer book list that includes made-up books. Only five of the 15 titles on the list are real.

Of the books named on this reading list, the titles attributed to Brit Bennett, Isabel Allende, Andy Weir, Taylor Jenkins Reid, Min Jin Lee, Rumaan Alam, Rebecca Makkai, Maggie O'Farrell, Percival Everett, and Delia Owens do not exist. That doesn't mean the authors don't exist. For example, Percival Everett is a well-known author whose novel James just won the Pulitzer Prize, but he never wrote a book called The Rainmakers, supposedly set in a "near-future American West where artificially induced rain has become a luxury commodity." Chilean American novelist Isabel Allende never wrote a book called Tidewater Dreams, which the list described as the author's "first climate fiction novel."

The real titles on the list include Ray Bradbury's wonder-filled summer novel Dandelion Wine, Jess Walter's Beautiful Ruins, and Françoise Sagan's Bonjour Tristesse.

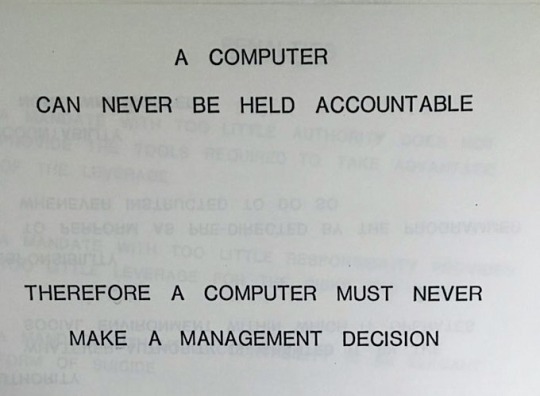

The list was part of licensed content provided by King Features, a unit of the publisher Hearst Newspapers, but the Sun-Times did not check it before publishing. So, who is most accountable for the error? Writer Marco Buscaglia has claimed responsibility. He said the list was partly generated by AI and told NPR, "Huge mistake on my part and has nothing to do with the Sun-Times. They trust that the content they purchase is accurate, and I betrayed that trust. It's on me 100 percent."

The fake summer reading list is dated May 18, two months after the Chicago Sun-Times announced that 20% of its staff had accepted buyouts "as the paper's nonprofit owner, Chicago Public Media, deals with fiscal hardship."

Another question for educators is how, where, and why the AI created this list. Where was it getting its information? How did it come up with detailed descriptions, such as one set in a "near-future American West where artificially induced rain has become a luxury commodity," for books that don't exist? How and why did it present these as real books?

This sounds like a good AI lesson: have students investigate how AI operates and why it often creates "hallucinations" (errors and factual mistakes).