LinkedIn's Economic Graph

I wrote earlier about LinkedIn Learning, a new effort by the company to market online training. I said then that I did not think it would displace higher education any more than MOOCs or other online education have. If successful, though, it will be disruptive and may push higher education to adapt sooner.

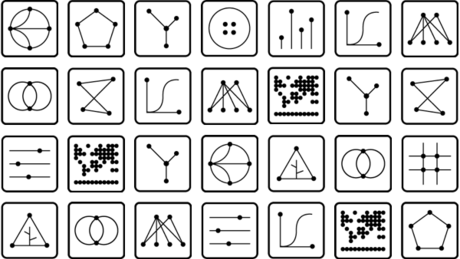

LinkedIn’s vision is to build what it calls the Economic Graph. That graph will be created using profiles for every member of the work force, every company, and "every job and every skill required to obtain those jobs."

That concept reminded me immediately of Facebook's Social Graph. Facebook introduced the term in 2007 as a way to explain how the then-new Facebook Platform would take advantage of the relationships between individuals to offer a richer online experience. The term is now used in a broader sense to refer to a social graph of all Internet users.

LinkedIn Learning is seen as a service that connects users, skills, companies and jobs. LinkedIn acknowledges that even with about 9,000 courses on its Lynda.com platform, it doesn't have enough content to accomplish that yet.

They are not going to turn to colleges for more content. They want to use the Economic Graph to determine the skills that they need content to provide based on corporate or local needs. That is not really a model that colleges use to develop most new courses.
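To make that idea concrete, here is a toy sketch of what "letting the graph pick the content" could look like. It is not LinkedIn's method, and nothing in it comes from LinkedIn's data: the job postings, the list of covered skills, and the `skill_gaps` function are all made up for illustration. It simply counts how often each skill is asked for and lists the ones no existing content covers.

```python
from collections import Counter

# Hypothetical toy data: each job posting lists the skills it requires.
job_postings = {
    "data analyst": ["sql", "statistics", "data visualization"],
    "ml engineer": ["python", "statistics", "biometric computing"],
    "security analyst": ["networking", "biometric computing"],
}

# Hypothetical catalog: skills that existing training content already covers.
covered_skills = {"sql", "python", "statistics", "networking"}


def skill_gaps(postings, covered):
    """Return (skill, demand) pairs for skills that jobs require but no
    content covers, ordered from most to least demanded."""
    # Count how many postings ask for each skill.
    demand = Counter(skill for skills in postings.values() for skill in skills)
    # Keep only the skills missing from the catalog.
    gaps = [(skill, count) for skill, count in demand.items() if skill not in covered]
    # Highest demand first; ties broken alphabetically for a stable order.
    return sorted(gaps, key=lambda pair: (-pair[1], pair[0]))


gaps = skill_gaps(job_postings, covered_skills)
print(gaps)
```

A real version would draw on millions of profiles and postings and would weight demand by region or industry, but the basic move is the same: demand drives the catalog, not the other way around.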

But Lynda.com offerings are not "courses" as we think of a course in higher ed. The training is based on short video segments and short multiple-choice quizzes. Enterprise customers can build playlists of content modules to create something course-like.

One critic of LinkedIn Learning said that this was an effort to be a "Netflix of education." That doesn't sound so bad to me. Applying data science to provide "just in time" knowledge and skills is something we have heard about in education, but it has never been used in any broad or truly effective way.

The goal is to deliver the right knowledge at the right time to the right person.

One connection for higher ed is that the company says it is launching a LinkedIn Economic Graph Challenge "to encourage researchers, academics, and data-driven thinkers to propose how they would use data from LinkedIn to generate insights that may ultimately lead to new economic opportunities."

Opportunities for whom? LinkedIn or the university?

This path is similar in some ways to adaptive-learning software that responds to the needs of individual students. I do like that LinkedIn Learning is also looking to "create" skills in order to fulfill perceived needs. Is there a need for training in biometric computing? Then create training for it.

You can try https://www.linkedin.com/learning/. When I went there, it knew that I was a university professor and showed me "trending" courses such as "How to Teach with Desire2Learn," "Social Media in the Classroom" and "How to Increase Learner Engagement." Surely, the more data I give them about my work and teaching, the more specific my recommendations will become.
