Edge Computing

I learned about edge computing a few years ago. It is a way of getting the most from data by performing the processing at the "edge" of the network, near the source of the data rather than at a distance. Doing this reduces the communications bandwidth needed between sensors and a central data center, because the analytics and knowledge generation happen right at or near the source of the data.
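To make the bandwidth argument concrete, here is a minimal Python sketch. It assumes a hypothetical sensor that reports one temperature reading per second. Instead of shipping every raw reading to a central data center, the edge device reduces each one-minute window to a four-number summary. The names `summarize_at_edge` and `values_sent` and the sample data are illustrative inventions, not any vendor's API.

```python
# Minimal sketch of edge-side aggregation. The sensor, sampling rate and
# function names are hypothetical, chosen only to illustrate the idea.
from dataclasses import dataclass, fields
from statistics import mean


@dataclass
class Summary:
    """Compact summary an edge device sends upstream instead of raw data."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_at_edge(readings: list[float]) -> Summary:
    """Reduce a window of raw readings to four numbers on the device itself."""
    return Summary(
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
    )


def values_sent(readings: list[float], at_edge: bool) -> int:
    """Count the numbers that cross the network under each approach."""
    return len(fields(Summary)) if at_edge else len(readings)


if __name__ == "__main__":
    # One minute of hypothetical once-per-second temperature readings.
    window = [20.0 + (i % 5) * 0.1 for i in range(60)]
    print(summarize_at_edge(window))
    print("sent to central data center:", values_sent(window, at_edge=False))  # 60
    print("sent from edge device:", values_sent(window, at_edge=True))  # 4
```

Sixty raw values become four. Real edge deployments may also filter, compress, or act on the data locally, but the principle is the same: do the work near the sensor and send less over the network.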

The cloud, laptops, smartphones, tablets and sensors may be new things, but the idea of decentralizing data processing is not. Remember the days of the mainframe computer?

The mainframe was (and in some places still is) a centralized approach to computing: all computing resources are in one location. That approach made sense once upon a time, when computing resources were very expensive - and big. The first mainframe, in 1943, weighed five tons and was 51 feet long. Mainframes allowed for centralized administration and optimized data storage on disk.

Access to the mainframe came via "dumb" terminals, or thin clients, that had no processing power of their own. Because these terminals couldn't process data, all the data went to, was stored in, and was crunched at the centralized mainframe.

Much has changed. Yes, a mainframe approach is still used by businesses like credit card companies and airlines to send and display data via fairly dumb terminals. And it is costly. And slower. And when the centralized system goes down, all the clients go down. You have probably been somewhere that couldn't process your order or access your data because "our computers are down."

It turned out that you could even save money by setting up a decentralized, or “distributed,” client-server network, in which processing is split between servers that provide a service and clients that request it. For applications to be decentralized, the client-server model needed PCs that could process data and perform calculations on their own.

Google Co-Founder Sergey Brin shows U.S. Secretary of State John Kerry the computers inside one of Google's self-driving cars - a data center on wheels. June 23, 2016. [State Department photo / Public Domain]

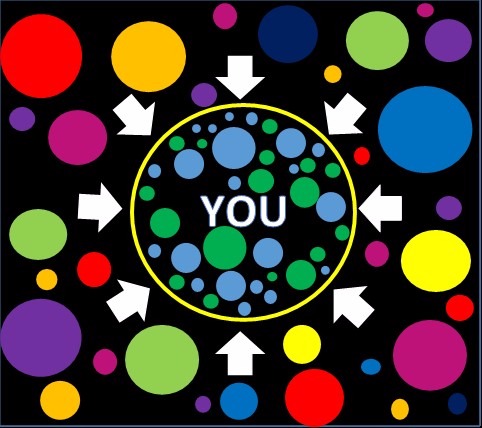

Add faster bandwidth, the cloud, and a host of other technologies (wireless sensor networks, mobile data acquisition, mobile signature analysis, cooperative distributed peer-to-peer ad hoc networking and processing) and you can compute at the edge. A whole vocabulary has come into being along the way: local cloud/fog computing, grid/mesh computing, dew computing, mobile edge computing, cloudlets, distributed data storage and retrieval, autonomic self-healing networks, remote cloud services, augmented reality, and more terms that I haven't encountered yet.

Recently, I heard a podcast, "Smart Elevators & Self-Driving Cars Need More Computing Power," that got me thinking about the billions of objects (the Internet of Things) now connecting to the Internet. Vehicles, elevators, hospital equipment, factory machines, appliances and a fast-growing list of other things are pushing companies like Microsoft and GE to put more computing resources at the edge of the network.

This is computer architecture for people, not things. In 2017, there were about 8 billion devices connected to the net, and that number is expected to reach 20 billion in 2020. Do you want the sensors in your car that are analyzing traffic and environmental data to send it to some centralized resource, or to process it in your car? Milliseconds matter in avoiding a crash, so the processing needs to be done at the edge. Cars are "data centers on wheels."
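A back-of-the-envelope calculation shows why milliseconds matter. The sketch below assumes a car travelling at 100 km/h and two purely hypothetical decision latencies (10 ms for on-board processing, 100 ms for a round trip to a distant data center), then computes how far the car moves before a decision arrives. The function name and all figures are illustrative assumptions, not measurements.

```python
# Back-of-the-envelope: distance a car covers while waiting for a decision.
# The speed and latency figures below are hypothetical assumptions.


def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Return the distance in metres covered at speed_kmh during latency_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s


if __name__ == "__main__":
    speed = 100.0  # km/h (assumed highway speed)
    for label, latency in [("on-board (assumed 10 ms)", 10.0),
                           ("remote round trip (assumed 100 ms)", 100.0)]:
        metres = distance_travelled_m(speed, latency)
        print(f"{label}: {metres:.2f} m")
```

At these assumed figures, the extra 90 ms of a remote round trip means the car travels about 2.5 metres further before it can react.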

Remember the early days of the space program? All the computing power was on Earth. That was dangerous, but it was the only option. You have no doubt heard the comparison that the iPhone in your pocket has hundreds or even thousands of times the computing power of those early spacecraft. Now, much of the computing power is at the edge - even when the vehicle is also at the edge of our solar system. And things that are not as far off as outer space, like a remote oil pump, also need to compute at the edge rather than connect to distant processing power.

Plan to spend more time in the future at the edge.

I'll be moderating a track next week at NJEdge.Net's Annual Conference:

Wayne Brown, CEO and Founder of the Center for Higher Ed CIO Studies (CHECS), will talk in his session about longitudinal higher education CIO research and the importance of technology leaders aligning technology innovations and initiatives with the needs of their institutions. His two-part survey methodology enables him to compare and contrast multiple perspectives on higher education technology leaders. The results provide essential information about the experience and background an individual should have to serve as a higher education CIO. In collaboration with NJEdge, Wayne will collect data from NJEdge higher education CIOs and compare the national results with those of the NJ CIOs.

Timothy Renick (a man of many titles: Vice President for Enrollment Management and Student Success, Vice Provost, and Professor of Religious Studies at Georgia State University) is talking about "Using Data and Analytics to Eliminate Achievement Gaps." The student-centered and analytics-informed programs at GSU have raised graduation rates by 22% and closed all achievement gaps based on race, ethnicity, and income level. GSU now awards more bachelor’s degrees to African Americans than any other college or university in the nation. Through a discussion of innovations ranging from chatbots and predictive analytics to meta-majors and completion grants, the session covers lessons learned from Georgia State’s transformation and outlines several practical, low-cost steps that campuses can take to improve outcomes for underserved students.

Greg Davies' topic is "The Power of Mobile Communications Strategies and Predictive Analytics for Student Success and Workforce Development." The technology that has transformed most other major industries, to both good and bad ends, can connect the valuable resources available on campus to the students who need them most, with minimal human resources. Industries such as banking, retail, and information and media have used technology to personalize the digital experience by reaching consumers via mobile devices. Higher education has, in some cases, been slow to adopt innovative and transformative technology, yet that technology's power to transform student engagement and success has been proven. With the help of thought leaders in industry and education, Greg discusses how the industry can achieve ubiquitous use of innovative student success technologies and predictive data analytics to enable unprecedented levels of student success and, as a consequence, workforce development.
