How Blue Is the Sky at Bluesky and Other Alternatives to X

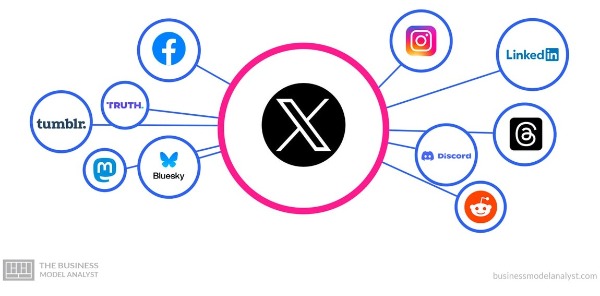

For more than 18 years, the social network X (which many of us still call Twitter) has dominated short-message social media. Many people still go there for real-time updates, breaking news, and conversations. But, and it is a big BUT, changes under Elon Musk's management of the platform over the past few years have driven many users to leave.

People are concerned about the lack of content moderation, reduced privacy protections, and subscription-based features (like paid verification). The general gripe is that it is an ugly space full of hate and misinformation. Users have sought out alternatives to X, and that meant there was a new user demand to be filled by other entrepreneurs.

Alternatives range from decentralized options like Mastodon to community-driven spaces like Bluesky. I have several clients who wanted to move off X to "something else" and asked me for advice over the past year. Friends have said to me, "You're still on Twitter? Why?"

The alternative most often mentioned is Bluesky. Interestingly, it was founded by Twitter co-creator Jack Dorsey. Bluesky began in 2019 as a project within Twitter to develop an open social protocol that would allow multiple apps to operate seamlessly. It was spun off as an independent entity in 2021. Its claim, and its appeal, is that it offers algorithmic transparency and user control, enabling individuals to tailor their social media experience.

Is Bluesky a return to a gentler, saner social-media experience? It does feel like the Twitter of a decade ago. It's not the 2017 Twitter that was full of pro-Trump and anti-Trump political pollution.

Recently, I created an account just to see what it's all about. It looks like Twitter. It even has a winged blue logo (a butterfly instead of a bird) and a character limit on posts. I am cautiously proceeding, following only a few. I have already read complaints that it has "MAGA trolls" and complaints by those accounts that they have been blocked. Is it liberal-left? The most followed account belongs to Representative Alexandria Ocasio-Cortez (AOC).

Cory Doctorow coined the term “enshittification” to describe how social-media companies make changes that benefit themselves while, gradually and almost inevitably, the user experience degrades. I suspect that more than just the user experience degrades. The content degrades.

Facebook and X have both been criticized of late for burying some news by deprioritizing links to articles. Instagram and Pinterest have been filling my feeds with some crazy, irrelevant AI-generated content. Sometimes my Instagram feed is 75% ads and people I don't follow. Where are the things and people I selected to follow?

What are the other alternatives in the microblogging world of social media?

Threads is Meta’s entry into the space. It's their "Twitter-killer," but Twitter survives. It allows users to post text updates, images, and videos, engage with hashtags, and interact through likes, comments, and reposts. While Threads functions as a standalone app, it is seamlessly integrated with Instagram, allowing users to sign up easily and access it through a tab within the Instagram app. It also encourages you to share your Instagram posts to Threads with a click. I use both, and I do sometimes share, but that seems repetitive. Still, my "audience" in Threads may be different from Instagram, though there is some overlap. Meta integration led to a staggering 100 million sign-ups within its first week, as Instagram users checked out this new social network. Threads' ad-free interface, clean design, and connection to Instagram’s ecosystem make it appealing, and so far, it is not as polluted as X. It is the text option paired with Instagram's visual option. A good idea for Meta.

I also added the Substack platform this year. It offers writers, journalists, and creators a space for short-form updates and community engagement. It is more blogging than microblogging, and some people write quite long pieces. It offers the option of having free or paid subscribers. Like Medium, that leads to the frustration of clicking on an interesting title and hitting the paywall. Substack Notes is their complement to the subscription-based model. Notes allows users to post microblogs, share snippets of their work, restack content they enjoy, and tag others to spark conversations.

On the plus side, Substack Notes focuses on more meaningful content rather than algorithm-driven visibility. As of now, it seems to be prioritizing quality over reach. Public by default, Notes appear in the feeds of followers and subscribers, allowing creators to build relationships while promoting their work. Though it lacks advanced interactivity or paywall options for full posts, Substack Notes is ideal for content-focused users seeking an integrated platform for sharing ideas and monetizing their audience.

I am feeling old because I am still using LinkedIn and Tumblr.

For professionals seeking a viable Twitter replacement, LinkedIn stands out as the one social media platform that seems "professional" and is tailored for networking, career growth, and industry engagement. Unlike microblogging platforms like X, LinkedIn emphasizes professional connections, enabling users to share updates, articles, and career insights in a format similar to tweets but with a focus on meaningful business discussions. As a social network, LinkedIn excels at fostering connections through its intuitive "connect" feature, where users can expand their network by engaging with mutual contacts and industry leaders. With its robust job postings, company pages, and professional tools, LinkedIn offers a structured alternative to platforms like Mastodon, Reddit, or Threads for those prioritizing career-focused interactions over casual content.

Though Tumblr is quite a different platform from X, it offers far more creative ways to share ideas and connect with others online. It combines blogging with an emphasis on visual storytelling (like Instagram) and allows users to post quite long text updates, images, GIFs, videos, and links. This separates it from most of the others. It really is a micro- or mini-blog platform.

Unlike the fast-paced, real-time interactions of X, you won't get the "news" here. Tumblr focuses on creativity and individuality, with customizable themes. What you may get is a lot of celebrity photos and nudity, even though they tried (unsuccessfully) to purge that content a few years ago.

Ghost students, as their name implies, aren’t real people. They are not spectral visions. Had you asked me earlier to define the term, I would have said it is a way to describe a student who is enrolled in a college or university but does not actively participate in classes or academic activities. However, these new ghosts are aliases or stolen identities used by scammers and the bots they deploy to get accepted to a college, but not for the purpose of attending classes or earning a degree. Why? What's the scam?
